Following on from Richard Johnson’s blog post “Inventive AI: can machines innovate?”, we now focus on machine learning.

Machine learning has taken the world by storm. Originally a relatively obscure subfield of artificial intelligence research in the late 1950s and early 1960s, machine learning has risen to prominence in modern technology. Its applications are wide-ranging, from predictive algorithms that analyse huge quantities of genetic data to voice assistants such as Siri and Alexa in our homes.

For inventors looking to use this exciting development in computing, a few questions stand out:

- What is machine learning?

- Should I use machine learning?

- Can I get protection for my new machine learning algorithm and how do I do it?

With so much to unpack in just three questions, we’re going to tackle each one in turn in a series of blog posts centred on machine learning.

What is Machine Learning?

Put simply, machine learning is the overarching term for a broad class of algorithms that are designed to improve with experience, i.e. algorithms that cause a computer to mimic a human’s capacity to learn.

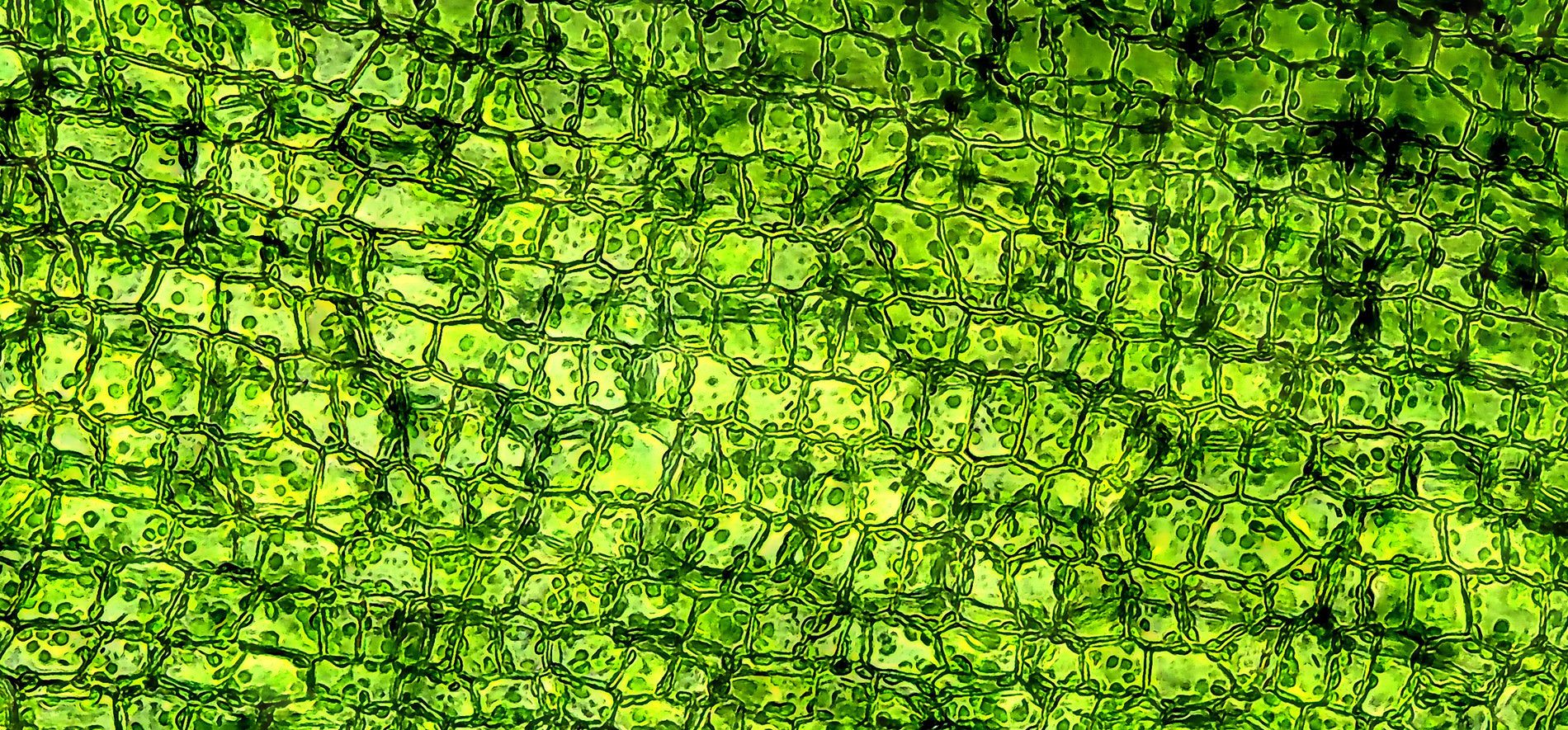

One example of a machine learning algorithm is the artificial neural network. This approach attempts to replicate, in an abstract way, the biological neural networks that form animal brains.

The easiest way to visualise how an artificial neural network functions is by way of example. As a “simplistic” case, imagine we have a vast number of images of different animals and we want to extract all of the images that are pictures of a cat. An artificial neural network could be trained to recognise an image of a cat by working out which features in an image are “cat-like”. In a first round of guesswork, we would give the algorithm some features that we think will help it find the cats. For example, we could set one or more nodes (analogous to neurons) to identify:

- triangular shapes that might indicate pointy, cat-like ears

- line-like features that resemble whiskers

- four legs sticking out of a body

- a tail-like shape opposite the side you find the ears and whiskers

- curved line-like features at the ends of legs that resemble claws

The algorithm takes these inputs and starts from a pseudo-random guess at how important each of the above features is to the image being of a cat, i.e. an initial weight for each feature in identifying a cat.
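To make the idea of feature weights concrete, here is a minimal Python sketch of a single artificial node scoring one image. It assumes, purely for illustration, that each feature in the list above has already been detected and reduced to a score between 0 and 1. The feature names, the scores and the `initial_weights` and `cat_score` helpers are hypothetical; real image-recognition networks learn their own features from raw pixels across many layers of nodes.

```python
import math
import random

# Hypothetical feature names based on the list above. A real network would
# detect these from the pixels; here each image is already summarised as a
# score between 0 (feature absent) and 1 (feature clearly present).
FEATURES = ["pointy_ears", "whiskers", "four_legs", "tail", "claws"]


def initial_weights(n_features, seed=0):
    """Return pseudo-random starting weights and bias: the node's first guess."""
    rng = random.Random(seed)  # seeded so the "random" guess is reproducible
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_features)]
    bias = rng.uniform(-1.0, 1.0)
    return weights, bias


def cat_score(features, weights, bias):
    """Combine the weighted feature scores into a single 0-1 'cat-ness' score."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))  # logistic (sigmoid) squashing


weights, bias = initial_weights(len(FEATURES))
image = [0.9, 0.3, 1.0, 0.8, 0.4]  # hypothetical feature scores for one image
print(f"Untrained cat score: {cat_score(image, weights, bias):.2f}")
```

Because the weights are only a pseudo-random first guess at this stage, the printed score says nothing yet about whether the image really shows a cat.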

The learning element of this algorithm lies in the fact that you have to provide the network with a huge batch of training data. This is labelled data, in which the network is told whether or not each image contains a cat, so the network can adjust the weight it gives to the output of each node according to how that node contributed to the known results in the training data. In this way, the algorithm might, for example, prioritise pointy ears and place a lower weighting on whiskers because they are more difficult to pick up from the image data.
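Training on labelled data can be sketched in a few lines of Python. The example below fits a single sigmoid node (essentially logistic regression, the simplest possible “network”) to a handful of invented, labelled feature scores using stochastic gradient descent. The data, the feature order and the function names are all hypothetical. The cats’ whisker scores are deliberately patchy to mimic whiskers being hard to pick up, so after training you would expect `pointy_ears` to carry more weight than `whiskers`.

```python
import math
import random

# Hypothetical feature order: [pointy_ears, whiskers, four_legs, tail, claws]
# Labelled training data (1 = cat, 0 = not a cat). All scores are invented;
# the cats' whisker scores are patchy to mimic whiskers being hard to detect.
TRAINING_DATA = [
    ([0.9, 0.2, 1.0, 0.8, 0.4], 1),  # cat, whiskers barely visible
    ([0.8, 0.9, 1.0, 0.9, 0.5], 1),  # cat, whiskers clear
    ([0.9, 0.1, 0.9, 0.7, 0.6], 1),  # cat, whiskers barely visible
    ([0.2, 0.7, 1.0, 0.9, 0.3], 0),  # dog (dogs have whiskers too)
    ([0.3, 0.3, 1.0, 0.9, 0.0], 0),  # horse
    ([0.1, 0.0, 0.0, 0.8, 0.7], 0),  # bird
]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(features, weights, bias):
    """Score between 0 and 1 that the image contains a cat."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Fit one sigmoid node to labelled examples by stochastic gradient
    descent on the cross-entropy loss."""
    rng = random.Random(seed)
    n_features = len(examples[0][0])
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_features)]  # random first guess
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (prediction - label).
            error = predict(features, weights, bias) - label
            for i, x in enumerate(features):
                weights[i] -= learning_rate * error * x
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
for name, w in zip(["pointy_ears", "whiskers", "four_legs", "tail", "claws"], weights):
    print(f"{name:12s} weight {w:+.2f}")
```

Each update nudges every weight in the direction that reduces the error on one known example, which is the sense in which the network “learns” from its training data.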

The real power of machine learning is that you can test the algorithm and retrain it to take into account new features that were not originally considered. For example, with the cat detection algorithm, you might retrain it to include a reasonable size range for a cat because you’ve started picking up a lot of images of lions and tigers!
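The lion-and-tiger problem can also be illustrated with a toy Python sketch. Below, a hypothetical lion happens to have exactly the same five feature scores as one of the training cats, so the original model cannot tell them apart. Retraining with a sixth, hypothetical “apparent size” feature, plus a couple of big-cat examples labelled “not a cat”, lets the model separate them. All numbers and names are invented, the model is again a single sigmoid node rather than a full network, and estimating real-world size from a photo is itself a hard problem that this sketch simply assumes away.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(features, weights, bias):
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train(examples, epochs=5000, learning_rate=0.5, seed=0):
    """Single sigmoid node trained by stochastic gradient descent."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(len(examples[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(features, weights, bias) - label
            for i, x in enumerate(features):
                weights[i] -= learning_rate * error * x
            bias -= learning_rate * error
    return weights, bias


# Hypothetical data, feature order [pointy_ears, whiskers, four_legs, tail, claws].
ORIGINAL_DATA = [
    ([0.9, 0.2, 1.0, 0.8, 0.4], 1),  # cat
    ([0.8, 0.9, 1.0, 0.9, 0.5], 1),  # cat
    ([0.9, 0.1, 0.9, 0.7, 0.6], 1),  # cat
    ([0.2, 0.7, 1.0, 0.9, 0.3], 0),  # dog
    ([0.3, 0.3, 1.0, 0.9, 0.0], 0),  # horse
    ([0.1, 0.0, 0.0, 0.8, 0.7], 0),  # bird
]

# A lion whose five feature scores exactly match the second cat's.
LION = [0.8, 0.9, 1.0, 0.9, 0.5]
old_weights, old_bias = train(ORIGINAL_DATA)
print(f"Old model, lion:      {predict(LION, old_weights, old_bias):.2f}")

# Retrain with a hypothetical sixth feature, apparent size (0 = small, 1 = large),
# and add big cats labelled "not a cat".
SIZES = [0.1, 0.15, 0.1, 0.4, 0.9, 0.05]
RETRAINING_DATA = [
    (features + [size], label)
    for (features, label), size in zip(ORIGINAL_DATA, SIZES)
]
RETRAINING_DATA += [
    (LION + [1.0], 0),                    # lion
    ([0.9, 0.2, 1.0, 0.8, 0.4, 1.0], 0),  # tiger, same scores as the first cat
]
new_weights, new_bias = train(RETRAINING_DATA)
print(f"New model, lion:      {predict(LION + [1.0], new_weights, new_bias):.2f}")
print(f"New model, house cat: {predict(LION + [0.15], new_weights, new_bias):.2f}")
```

The point of the design is that no amount of extra training on the original five features could fix the mistake: the model needed a new input that actually distinguishes a house cat from a lion, together with training examples that show the difference.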

Obviously, machine learning can perform many more functions than identifying cats. Voice and speech recognition, image characterisation, advanced non-linear data analysis, and even medical diagnoses are all possible using the power of machine learning.

The take-home lesson is that the quality of a machine learning algorithm’s output is, counterintuitively, determined by the insight and ability of the user. Providing adequate training data, in terms of both quality and quantity, is vital to the success of a machine learning algorithm. If that hurdle is cleared, however, you could quickly find yourself with an exceptionally powerful and versatile tool for tackling problems that were previously assumed to be impossible.

So, to answer the question opening this post, “what are the machines actually learning?”: put simply, they’re learning to do whatever we want them to, and they’re learning it as well as we enable them to. Machine learning isn’t a magic bullet that will achieve a so-called “general artificial intelligence”. Indeed, academics have already considered the limitations of advanced machine learning algorithms with a healthy degree of scepticism, for example in this publicly available article on arXiv. However, while such scepticism may indicate a need for further research in the computer science community to push the limits of machine learning even further, it should not detract from the potentially game-changing applications of existing machine learning algorithms to real innovation. Rather than moving towards a dystopian or apocalyptic Terminator-like future, we’re pushing forward at an ever-increasing speed to understand and solve increasingly complex problems for the benefit of users worldwide.

This blog was originally written by Alexander Savin.

James is a Partner and Patent Attorney at Mewburn Ellis. He has a wide range of experience in patent drafting and prosecution at both the European Patent Office (EPO) and UK Intellectual Property Office (UKIPO) across a variety of industry sectors. James has particular expertise in the patentability of software and business-related inventions in Europe.

Email: james.leach@mewburn.com
