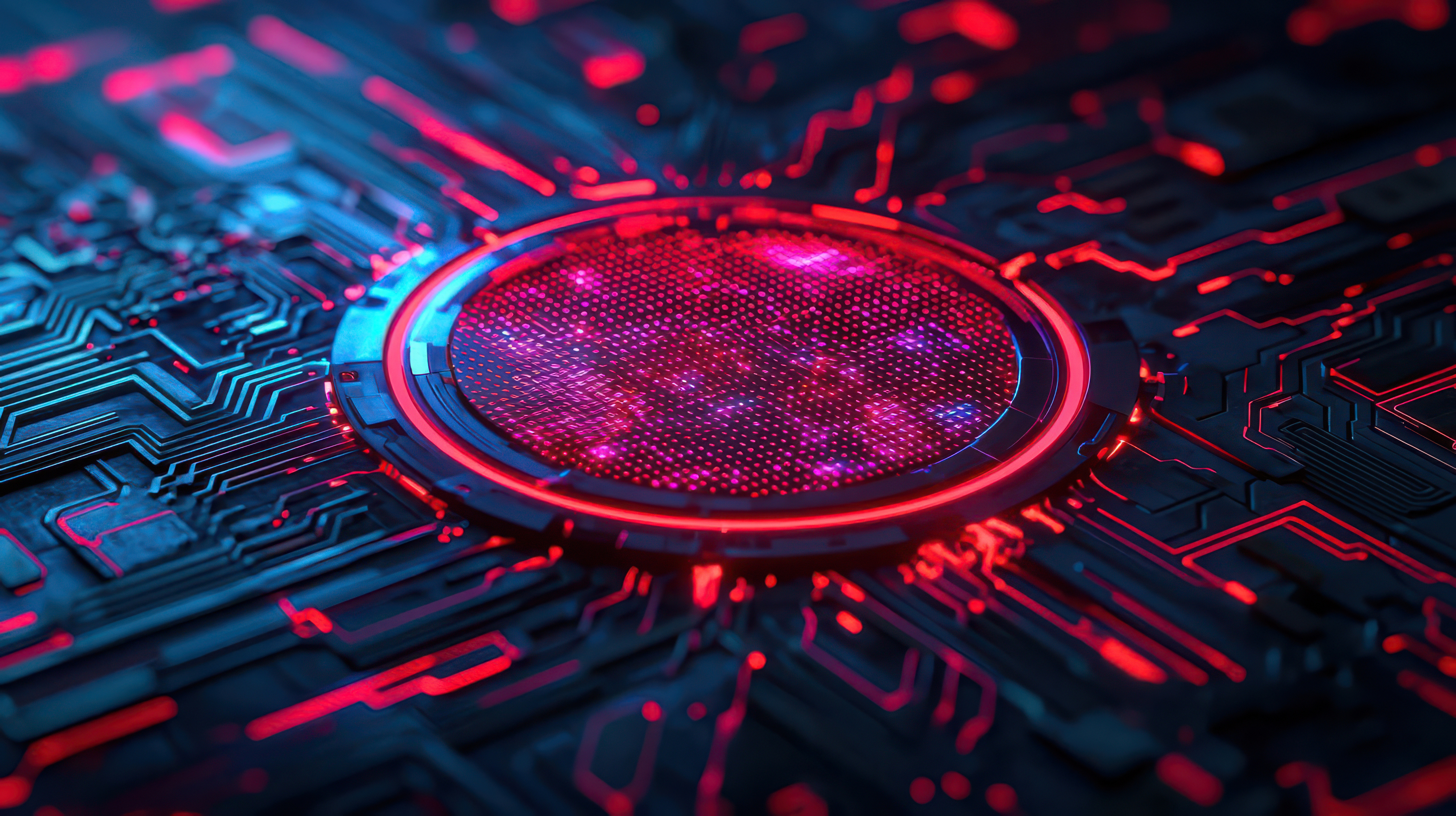

Widely anticipated as the next great leap in human technology, quantum computing promises a paradigm shift across a vast array of fields, from precision medicine to cryptography.

Indeed, this leap appears to be fast approaching. In 2019, Google claimed that its Sycamore quantum processor had achieved 'quantum supremacy', meaning it had performed a computation that would take a classical supercomputer thousands of years. More recently, the same processor performed the first quantum simulation of a chemical reaction. Despite these impressive milestones, a processor with a mere 54 qubits is primitive compared with the full potential of large-scale quantum computing, and a core phenomenon of quantum mechanics still obstructs progress: quantum decoherence.

Decoherence

Despite the common misconception that quantum mechanics applies only to microscopic particles, quantum laws govern your desk chair just as they govern an electron. So why does our everyday experience of reality seem so irreconcilable with the microscopic world? The answer is that quantum systems are tremendously sensitive to environmental noise, and as systems grow in size and complexity, they become increasingly difficult to shield. Of course, the environment is also subject to quantum laws, but the inconceivably large number of interactions experienced by a macroscopic object effectively washes out its quantum effects. To draw an analogy, consider the uniform ripples produced by a single pebble dropped into a calm lake; now imagine a thousand pebbles dropped at once. The resulting chaos would render individual ripples indiscernible, much like the illusory disappearance of quantum mechanics at macroscopic scales.
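The "thousand pebbles" picture can be made slightly more concrete with a toy simulation. The Python sketch below assumes a hypothetical noise model (not one taken from this article) in which each interaction with the environment adds a small, independent Gaussian random phase to a qubit in the equal superposition (|0> + e^(i*phi)|1>)/sqrt(2). A single kick barely matters, but averaged over many runs the accumulated phases scatter, and the surviving coherence |<e^(i*phi)>| shrinks towards zero. For Gaussian kicks of standard deviation sigma, it should approach exp(-n*sigma**2/2) after n kicks. The function name and parameters are illustrative only.

```python
import cmath
import random

def coherence_after_kicks(n_kicks, kick_std=0.1, trials=2000, seed=0):
    """Estimate |<exp(i*phi)>|: the coherence left in (|0> + exp(i*phi)|1>)/sqrt(2)
    after n_kicks random phase kicks, averaged over `trials` independent runs."""
    rng = random.Random(seed)
    total = 0j
    for _ in range(trials):
        # Hypothetical noise model: each environmental interaction adds an
        # independent Gaussian phase with standard deviation kick_std (radians).
        phi = sum(rng.gauss(0.0, kick_std) for _ in range(n_kicks))
        total += cmath.exp(1j * phi)
    return abs(total / trials)

# Coherence starts at 1 and decays as more "pebbles" hit the qubit.
for n in (0, 10, 100, 400):
    print(n, round(coherence_after_kicks(n), 3))
```

Each individual run still describes a perfectly coherent superposition; it is the averaging over an uncontrolled environment that makes the interference effects vanish, which is the sense in which quantum behaviour "disappears" at large scales.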

Consequently, as we strive to produce large-scale quantum computers, we must ensure that delicate quantum states are sufficiently protected from environmental noise; if not, computational processes will be riddled with errors. Let us take a closer look.

Qubits and error correction

As touched on in our previous blog, 'Quantum computing: Why qubits have consequences for IP', classical computers function by performing logical operations on binary units of information called 'bits'. Importantly, a classical bit always takes a definite value, usually represented as '1' or '0', which can be thought of as 'on' or 'off'. A classical computer can therefore occupy only one state at a time and must follow a single branch of logical operations.

In contrast, quantum bits ('qubits') may exist in a peculiar 'superposition' of states: rather than being constrained to either 'on' or 'off', a qubit occupies a probabilistic spectrum extending between the two. A quantum computer can exploit this superposition to explore many computational paths in parallel, and the size of the state it manipulates grows exponentially, doubling with each additional qubit. As a result, even a relatively small number of qubits is capable of handling extreme complexity. Nevertheless, the introduction of errors is all but inevitable when manipulating qubits.
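The exponential growth described above is easiest to see in a state-vector simulation. The Python sketch below is a minimal illustration using hypothetical helper functions written for this example (not a real library API). It represents n qubits as a list of 2**n complex amplitudes, applies a Hadamard gate to each qubit to create an equal superposition, and uses the Born rule (probability = |amplitude|**2) to obtain the measurement probabilities.

```python
import math

def zero_state(n_qubits):
    """|00...0> as a list of 2**n_qubits complex amplitudes."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    return state

def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` (bit `target` of each basis index)."""
    s = 1 / math.sqrt(2)
    new = list(state)
    for i in range(len(state)):
        if not (i >> target) & 1:  # visit each (bit=0, bit=1) pair exactly once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i] = s * (a + b)  # H|0> = (|0> + |1>)/sqrt(2)
            new[j] = s * (a - b)  # H|1> = (|0> - |1>)/sqrt(2)
    return new

def probabilities(state):
    """Born rule: basis outcome k is observed with probability |amplitude_k|**2."""
    return [abs(a) ** 2 for a in state]

n = 3
state = zero_state(n)
for q in range(n):
    state = apply_hadamard(state, q)

print(len(state))            # 2**3 = 8 amplitudes
print(probabilities(state))  # eight values, each approximately 1/8
```

Each extra qubit doubles the list: 10 qubits need 1,024 amplitudes and 50 need roughly 10^15, which is why classical simulation quickly becomes infeasible. A measurement, however, returns only one of those outcomes, so quantum algorithms must be designed so that interference concentrates probability on useful answers.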

Classically, an error corresponds to a bit flip: a '1' incorrectly replaced by a '0', or vice versa. Such errors can be corrected by storing multiple copies of the information and checking the copies against one another. For qubits, however, this approach breaks down. If we measure a qubit at an intermediate stage of a calculation to check whether an error has occurred, we risk collapsing its quantum state and invalidating the computation. In other words, measurement itself induces decoherence, because the measuring apparatus is part of the environment with which the qubit interacts. Moreover, the no-cloning theorem forbids copying an unknown quantum state in the first place.
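The classical copy-and-check strategy can be sketched as a three-bit repetition code with majority voting. The Python example below is illustrative only: it assumes a simple hypothetical channel that flips each copy independently with probability p, then compares a Monte Carlo estimate of the decoding failure rate with the exact formula 3p^2(1 - p) + p^3 (failure requires two or three of the copies to flip).

```python
import random

def encode(bit):
    """3-bit repetition code: store three copies of the bit."""
    return [bit, bit, bit]

def noisy_channel(codeword, p, rng):
    """Hypothetical noise model: flip each copy independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]

def decode(codeword):
    """Majority vote: recovers the bit if at most one copy was flipped."""
    return 1 if sum(codeword) >= 2 else 0

def logical_error_rate(p):
    """Exact probability that majority voting fails (two or three copies flipped)."""
    return 3 * p**2 * (1 - p) + p**3

rng = random.Random(1)
p, trials = 0.05, 100_000
failures = sum(decode(noisy_channel(encode(1), p, rng)) != 1 for _ in range(trials))
print(failures / trials)      # Monte Carlo estimate
print(logical_error_rate(p))  # analytic value, about 0.00725
```

Encoding only helps when logical_error_rate(p) < p, which holds for p < 1/2: a classical toy analogue of an error threshold. Reading and copying the bits freely is exactly what a quantum computer cannot do.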

Evidently, conventional error correction techniques cannot be applied directly in this context, so alternative schemes are needed if we are to overcome the decoherence problem. Fortunately, we at least have a mathematical description of the standard we must meet: the 'quantum threshold theorem' states that a quantum computer whose physical error rate lies below a certain threshold can, in principle, use error correction to perform arbitrarily long computations reliably. In essence, errors must be corrected faster than they are produced.
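One of the simplest alternative schemes is the three-qubit bit-flip code. The Python sketch below assumes a plain state-vector simulation built from hypothetical helpers written for this illustration (not a library API). It encodes alpha|0> + beta|1> as alpha|000> + beta|111>, applies a single bit-flip error, and then checks two parities (qubits 0 and 1, and qubits 1 and 2) instead of the qubits themselves. Because every component of the damaged state shares the same parities, this 'syndrome' identifies the flipped qubit without revealing, or disturbing, alpha and beta. For simplicity, the parities are read straight off the simulated amplitudes; a real device would extract them onto extra (ancilla) qubits.

```python
def encode(alpha, beta):
    """alpha|0> + beta|1> -> alpha|000> + beta|111> (8 amplitudes; bit k of index = qubit k)."""
    state = [0j] * 8
    state[0b000] = alpha
    state[0b111] = beta
    return state

def apply_x(state, qubit):
    """Bit-flip (Pauli X) on one qubit: swap amplitudes whose indices differ in that bit."""
    return [state[i ^ (1 << qubit)] for i in range(len(state))]

def bit(i, k):
    return (i >> k) & 1

def measure_syndrome(state):
    """Parity checks on qubits (0, 1) and (1, 2). For a code state with at most one
    flip, every nonzero component gives the same parities, so the outcome is
    deterministic and says nothing about alpha or beta."""
    outcomes = {(bit(i, 0) ^ bit(i, 1), bit(i, 1) ^ bit(i, 2))
                for i, a in enumerate(state) if abs(a) > 1e-12}
    assert len(outcomes) == 1, "syndrome would not be deterministic"
    return outcomes.pop()

# Which qubit to flip back for each syndrome (None = no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    flip = CORRECTION[measure_syndrome(state)]
    return state if flip is None else apply_x(state, flip)

alpha, beta = 0.6, 0.8j  # hypothetical logical state with |alpha|^2 + |beta|^2 = 1
encoded = encode(alpha, beta)
for q in range(3):
    recovered = correct(apply_x(encoded, q))
    print(q, recovered == encoded)  # True for every single-qubit flip
```

This toy code corrects only single bit flips; practical schemes such as the surface code must also handle phase errors, and the threshold theorem describes how such codes behave as they are scaled up.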

Looking forward

Despite the abundance of theoretical and practical challenges associated with quantum error correction, there is no shortage of proposed solutions. The problem has been tackled from almost every conceivable angle: so-called 'quantum noise-cancelling headphones' were proposed last year, a 2-qubit system has met the threshold for 'fault tolerance', and a recent error-correction algorithm successfully doubled the lifetime of qubits. Meanwhile, Microsoft are working towards a potentially exciting alternative known as 'topological quantum computing', which relies on exotic quasiparticles whose existence has not yet been conclusively demonstrated.

This blog was written by Michali Demetroudi.

Andrew is a Senior Associate and Patent Attorney at Mewburn Ellis. He works primarily in the fields of telecoms, electronics and engineering, and specialises in quantum technologies, photonics and ion optics. Andrew has extensive experience of drafting and prosecution, global portfolio management and invention capture to secure a commercially valuable IP portfolio. He also conducts freedom to operate analyses and performs due diligence.

Email: andrew.fearnside@mewburn.com
